I was invited to give a performance and demonstration of my motion tracking audio system at Crux Festival 2024 and used it as an opportunity to develop my existing setup into a new motion-tracked audio visual performance, including an interactive video element made in MAX using its OpenGL architecture. It was a great opportunity for me to learn about video processing on the graphics card and to develop custom shaders in MAX that react to my skeletal movements alongside the audio processing. This is an ever-evolving project and I look forward to seeing where it takes me in the future!

Benji Reid - Find Your Eyes - Video System Design and Show Controller

My task on this project was to create a system to project still photographs, captured on stage with a Canon R5, onto two large projection screens in as short a time as possible. I built a custom MAX patch that scans a designated upload folder and analyses the pixel dimensions of each new image to determine whether it is portrait or landscape, before it is processed in Resolume Arena and sent out to two 6 metre projection screens. Having premiered at Manchester International Festival in 2023, ‘Find Your Eyes’ is a stunning show that I’m looking to continue touring throughout 2024 and 2025.
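
The folder scan and orientation check can be sketched in Python. This is not the MAX patch itself: the helper names, the accepted file extensions and the rule that square images count as landscape are assumptions, and reading pixel dimensions from a file is left to an image library. Cameras may also store a rotation flag in EXIF metadata rather than rotating the pixels, which a check based on pixel dimensions alone would not see.

```python
import os
from pathlib import Path

# Assumed set of file types the upload folder might receive.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".tif", ".tiff"}


def orientation(width: int, height: int) -> str:
    """Classify an image from its pixel dimensions.

    Square images are treated as landscape (an arbitrary choice).
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    return "portrait" if height > width else "landscape"


def scan_for_new_images(folder: str, seen: set) -> list:
    """Return image files in `folder` not returned by an earlier scan.

    `seen` holds file names already handled and is updated in place.
    Results are ordered by modification time, oldest first.
    """
    new_files = []
    with os.scandir(folder) as entries:
        for entry in entries:
            path = Path(entry.path)
            if (entry.is_file()
                    and path.suffix.lower() in IMAGE_EXTENSIONS
                    and entry.name not in seen):
                seen.add(entry.name)
                new_files.append(path)
    new_files.sort(key=lambda p: p.stat().st_mtime)
    return new_files
```

Polling `scan_for_new_images` on a short timer and passing each new file's dimensions to `orientation` gives a portrait/landscape decision per photograph; routing the result on to Resolume Arena is not shown.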

Complicite - Drive Your Plow Over the Bones of the Dead - Video Design Associate

I began working with Complicite during the R&D process of ‘Drive Your Plow’ in the autumn of 2023, exploring technical ideas regarding video in the early stages of development. From tinkering with live camera feeds and MAX/MSP patches during rehearsals, I ended up as an integral part of the video team as the show moved into production and began touring in 2023.

Array Infinitive - Leslie Deere

After working on ‘Modern Conjuring for Amateurs’ with Leslie Deere back in 2016, I was invited back to help develop her new piece ‘Array Infinitive’. I was brought on board to develop a gestural interface for a new audio visual performance aimed at creating a meditative, communal space in VR, with reactive audio and video elements controlled by Leslie in real time.

Here we took real-time control data from the hand controllers of the Oculus Quest into MAX, where I developed the interaction design; this in turn drove changes to audio parameters inside Ableton Live for the sound design that Leslie and I developed.

This resulted in an audio visual performance instrument that Leslie could learn like any other instrument and play to a group of participants wearing their own VR headsets.

Kinect Body Instruments - Boss Kite @ More Kicks Than Friends

2020 saw me sink my teeth back into my Kinect body instruments project, with the opportunity to perform on a few online platforms. The system has had a bit of an overhaul, as I’ve been trying to create a patch that works across a whole performance rather than treating the tracking camera as a control for individual instruments. The system uses a Kinect 2.0 to track my limbs and convert joint locations in space to MIDI data, which then controls a whole host of samplers, synthesizers and FX parameters inside Ableton Live.
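
The joint-to-MIDI step can be sketched as a linear scaling into the 7-bit range of a MIDI Control Change message. This is a Python illustration rather than the MAX patch; the hand-height range, the controller number and the function names are hypothetical.

```python
def scale_to_midi(value: float, lo: float, hi: float) -> int:
    """Map `value` linearly from [lo, hi] to 0..127, clamping outside the range."""
    if hi == lo:
        raise ValueError("lo and hi must differ")
    t = (value - lo) / (hi - lo)
    t = min(max(t, 0.0), 1.0)
    return round(t * 127)


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a raw 3-byte MIDI Control Change message (channel is 0-based, 0..15)."""
    if not (0 <= channel <= 15 and 0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("MIDI field out of range")
    return bytes([0xB0 | channel, controller, value])


# Hypothetical mapping: right-hand height in metres, assumed to move between
# -0.5 and 1.0, drives CC 1 on MIDI channel 1 (0 in zero-based form).
hand_y = 0.25
message = control_change(0, 1, scale_to_midi(hand_y, -0.5, 1.0))
print(message.hex())  # prints: b00140
```

Clamping keeps the controller value valid when a limb moves outside the calibrated range, and passing a `hi` lower than `lo` simply inverts the mapping.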

Soundworlds - Ambisonic Podcasts

During the pandemic of 2020, director Patrick Eakin Young approached me to help transform a number of current and future productions into audio drama / podcast format, or… SONIC THEATRE! Beginning with stems from a live recording of a piece that had already toured, I was tasked with mixing and mastering for podcast formats.

We decided to mix each piece in ambisonics, a format often described as 360 audio. When rendered through a binaural decoder, ambisonics greatly enhances the possibilities for audio spatialisation compared with a traditional stereo mixdown. This let us be much more creative in the mix, transporting the listener to different ‘locations’ and creating a much more accurate sense of space.
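
For readers unfamiliar with ambisonics, a first-order encode places a mono source in the sound field by weighting it into four channels according to its direction. The Python sketch below assumes the AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalisation), with azimuth measured anticlockwise from the front and elevation upwards. It is a generic illustration, not the toolchain used on Soundworlds, and the binaural decode is a separate step not shown here.

```python
import math


def encode_foa_ambix(sample: float, azimuth_deg: float, elevation_deg: float):
    """Encode one mono sample into first-order AmbiX (W, Y, Z, X; SN3D).

    Azimuth: degrees anticlockwise from straight ahead (90 = hard left).
    Elevation: degrees above the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                  # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)    # left/right figure-of-eight
    z = sample * math.sin(el)                   # up/down figure-of-eight
    x = sample * math.cos(az) * math.cos(el)    # front/back figure-of-eight
    return (w, y, z, x)


# A source straight ahead lands in W and X; one hard left lands in W and Y.
print(encode_foa_ambix(1.0, 0.0, 0.0))
print(encode_foa_ambix(1.0, 90.0, 0.0))
```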

Stay updated for more episodes in the future at soundworlds.org

Uninvited Guests - To Those Born Later

Uninvited Guests travelled the country to create a digital record of our time, making a collection of things that matter to you, to keep in a time capsule that will be opened in 150 years.

What image of our world should the people of the future see?

What would you want to go down in history?

What do you want remembered?

I was brought on board during the second stage of development for this project to design the sound, streamline the technical system and act as technical manager on tour. The project involved an incredibly technical and bespoke setup, developed by the team at Fenyce Workspace, in which audiences uploaded content, web scraping downloaded that media during the show, and I sourced and played an adaptive soundtrack in real time during each show from audience suggestions submitted digitally.

Atomic 50 - Immersive Performance Workshop for Young People

Commissioned by Waltham Forest Council to celebrate the history of tin manufacturing in the Leyton area of London, ‘Atomic 50’ is part performance, part interactive workshop for young people, transforming a disused school building in Leyton Sports Ground into a ‘ghost’ factory that returns when there’s a need to revive the importance of metalworking skills.

I was approached to design the sound and composition, installing over 50 speakers, bespoke playback devices and triggers across multiple rooms. The showpiece for me was a ‘singing sculpture’, where young people could record their thoughts and feelings; the recordings were fed back into the sculpture and mixed with a composition in real time, so the audio installation changed and evolved with each performance.

BORDER - A VR RIDE - Spatial Audio for VR

BORDER is a virtual reality event and documentary. The show takes its audience on a motorbike journey through dramatic scenery that has provoked debate, conflict, poetry and questions of national identity. Using 360 filming technology, this thrilling experience celebrates the communities and people of Northern Ireland.

Working with long-term collaborator Abigail Conway, I was invited onto the project to provide sound design and composition for a VR / 360 film documenting a motorcycle journey along the Irish / Northern Irish border.

This was my first opportunity to work with VR / 360 film, so I decided to develop the soundtrack in Reaper using the FB360 Spatial Workstation, delivering the final audio in an ambisonic format so that it could be ‘headtracked’ in the 360 film. This meant that audio could be tied to visual elements of the film: as the viewer turns their head, audio components move with the viewer’s perspective, creating a realistic and immersive audio experience to accompany the film.
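
Head tracking works because a first-order ambisonic field can be rotated cheaply: when the viewer turns their head, the field is counter-rotated so that sounds stay fixed to the picture. In a finished 360 film this rotation is applied by the playback software rather than by hand, so the Python sketch below only illustrates the principle for a yaw (left/right) turn. It assumes the AmbiX channel order W, Y, Z, X and treats a positive yaw as a turn to the left; the function name is hypothetical.

```python
import math


def rotate_yaw(w, y, z, x, head_yaw_deg):
    """Counter-rotate a first-order AmbiX frame (W, Y, Z, X) for a head turn.

    head_yaw_deg: how far the listener has turned, anticlockwise seen from
    above (positive = turned to the left). W and Z are unaffected by yaw.
    """
    psi = math.radians(head_yaw_deg)
    x_rot = x * math.cos(psi) + y * math.sin(psi)
    y_rot = y * math.cos(psi) - x * math.sin(psi)
    return (w, y_rot, z, x_rot)


# A source hard left (W=1, Y=1, Z=0, X=0) ends up straight ahead once the
# listener has turned 90 degrees to the left to face it.
w, y, z, x = rotate_yaw(1.0, 1.0, 0.0, 0.0, 90.0)
print(round(y, 6), round(x, 6))  # prints: 0.0 1.0
```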

‘BORDER - A VR RIDE’ was shown at the Art 50 Openfest festival at the Barbican on the 23rd February 2019.

Erratica - Toujours et Pres de Moi

I was invited back to work with Erratica this summer to design the sound for their show ‘Toujours et Pres de Moi’, which went up to the Edinburgh Fringe Festival 2018. The show consists of two actors on stage interacting and setting the stage for two further characters represented in a holographic video. Thanks to a huge reflective screen angled at 45 degrees to the audience, the effect of two miniature characters dancing and interacting with real-world objects on a large wooden table is incredibly realistic and somewhat eerie.

It was a particularly interesting challenge for me as a sound designer, as much of the design was tied to the video content but was also spatialised across an eight-channel sound system in the theatre. This let me experiment with lots of shifting perspectives, drawing the audience close into the world of the projected characters with small speakers installed underneath the table, and expanding back out into the auditorium with rear-stage and surround speakers.

Dreamthinkspeak - One Day, Maybe

I was invited by Dreamthinkspeak’s Tristan Sharps to design and install the sound for their large-scale, site-responsive performance as part of Hull 2017 City of Culture. The installation spanned four floors of a derelict office building, where participants were guided through Kasang headquarters, a fictional company specialising in smart technologies. The sound install comprised over one hundred speakers, bespoke playback devices and custom control software developed in MAX to enable flexible control of audio playback in a truly unique performance scenario.

You can find more information about the project from the link below

https://www.hulldailymail.co.uk/whats-on/whats-on-news/take-mazes-try-out-amazing-441382

For Amusement Purposes Only

Turns out someone had a bonkers idea to turn a pinball table into an interactive performance. That person was Erratica, a London-based arts company that makes music and theatre performances with a heavy interest in technology. I was brought on board to create a generative music patch, taking trigger inputs from every conceivable flipper, bumper, button and ramp (and the mouth of a four-inch talking clown).

In collaboration with Patrick Eakin Young (Erratica artistic director) and Matt Rogers (composer), I built the compositional interface in MAX that sat between the pinball table and Matt, who would use it to compose an interactive score. We had a three-week residency to begin our research, partnering with Rambert Dance Company to explore exactly what the pinball machine was capable of and how we could make the most of it.

House of Healing - ZU-UK / Barcu Festival Bogota

In October 2016 I was invited as both sound designer and workshop leader to join ZU-UK theatre in creating an immersive theatre installation for Barcu Festival in Bogotá, Colombia. We worked with local Colombian artists over two weeks to create a multimedia performance, drawing on the broad range of skills in the group, which included visual artists, video makers, performers and designers.

The piece took the concept of 'house of healing' as a starting point, which we ended up subverting to make the audience think about their own ideas of healing within the setting of a converted children's home. On the technology side, we used binaural audio, a VR experience, projection mapping and a silent disco quiz to help lead the audience through the piece.

Modern Conjuring for Amateurs - Leslie Deere

My gesture control system saw its first public outing this June in 'Modern Conjuring for Amateurs', a performance piece devised by Leslie Deere in collaboration with myself and Tim Murray Browne as part of the Whitstable Biennale Festival. The piece combined Leslie's background in movement and sonic arts with my software system to create an intimate performance, conjuring up sound and projected image through movement and gesture. I took on the role of technical collaborator, providing the software system that translated her movements into sound modulation throughout the performance. The piece was supported by Sound and Music and Music Hackspace.

Sound Design and Workshop Leader - Young Roots Anarchy

This spring I embarked on a young people's project to create a series of theatre performances and installations with three colleges from Hampshire for Winchester Hat Fair Festival. With Wet Picnic artistic director Matt Feerick, we chose to create binaural sound pieces, combined with live performance, to explore our stimulus of the English Civil War in and around the Winchester area. This technique has become popular in recent years, notably through Complicite's production 'The Encounter', which uses binaural recording to build and enhance storytelling.

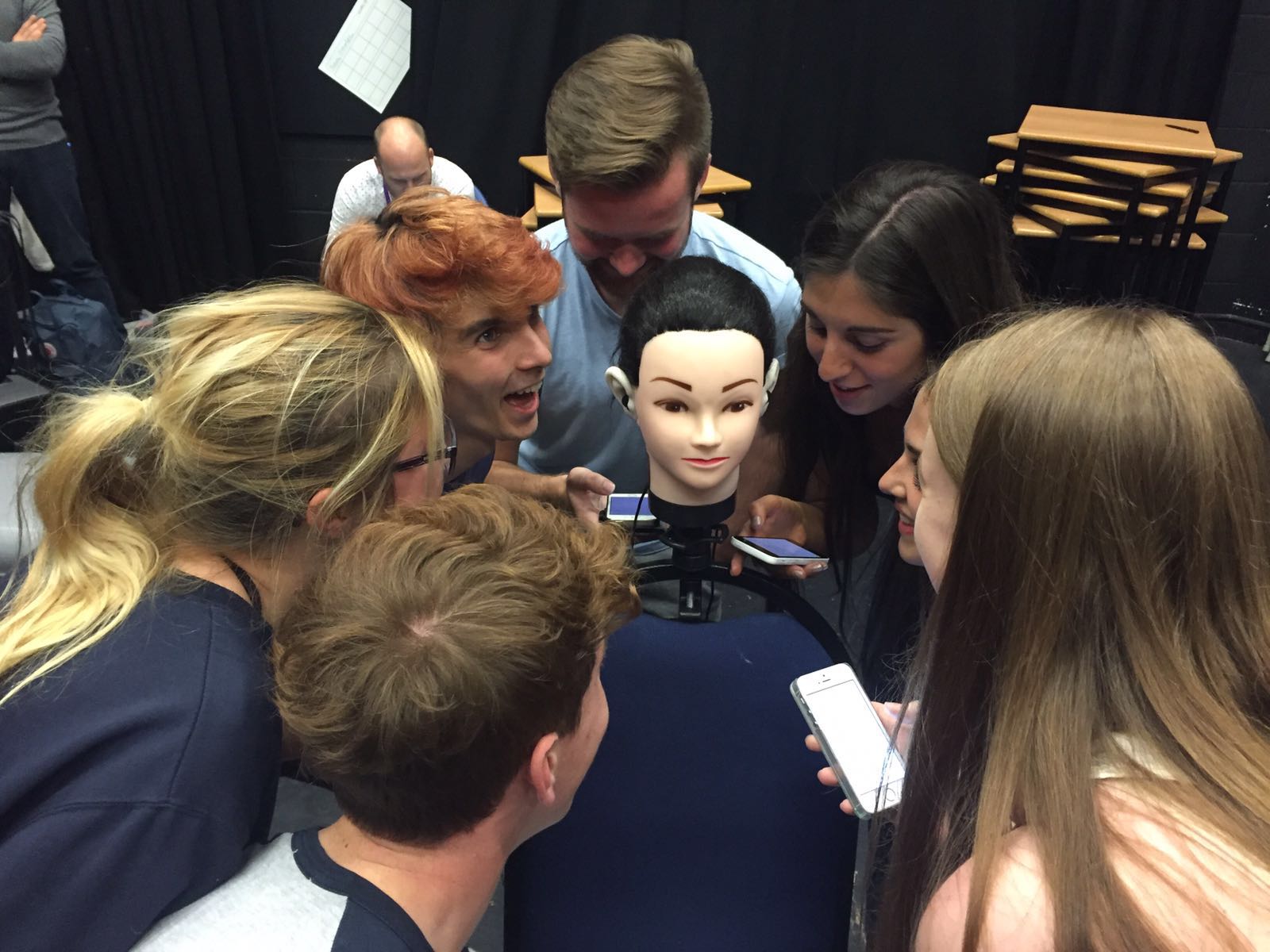

I decided to create my own binaural microphone dummy head for the project, which we used to create 3D sound worlds to accompany each performance. Using binaural recording techniques, we explored different ways that text could be delivered to the audience; this works especially well when recordings are made at various distances from the microphone. It was particularly effective for Barton Peveril College's group performance, which looked at propaganda, where comments could be whispered secretively into the audience's ears.

Kinect Body Instruments - Lecture Demonstration at Point Blank Studios

Over the last few years I've been developing an interactive music system that takes movement data from the Microsoft Kinect camera to create and modulate sound in real time, for performance and for generating sound from movement of the body. I was invited down to Point Blank Music Studios in London to talk at their Ableton User Group Meet Up about how I integrate the system using MAX and Ableton Live.

Artistic Residency - G.A.S Station / ZU-UK

I was lucky enough to be invited down to G.A.S. Station for a week in January 2016 as an artistic resident, giving me the space, time and resources to explore my own practice. The space is run by the wonderful ZU-UK theatre, whose notable work includes 'Hotel Medea'. I took the opportunity to invite a host of collaborators down across the week to help explore how interactive technologies could be used collaboratively across different art forms.

The Iliad - British Museum / Almeida Theatre

I was excited to be asked to support the production team through a dusk till dawn reading of Homer's 'The Iliad' in the iconic setting of the British Museum. The reading, hosted by the Almeida Theatre, featured a cast of over 50 actors, including Simon Callow, Brian Cox and Mark Gatiss, reading individual sections of the text from start to finish. I was responsible for looking after sound throughout the day, fitting microphones and manning the sound desk during the reading.

The Incandescents - Wellcome Collection

I was asked to program an audio responsive light system for Subject to Change's theatre installation 'The Incandescents' as part of the 'On Light' exhibition at the iconic Wellcome Collection in London. I used the software 'VenueMagic' to import audio and create a modulating DMX signal from multiple audio tracks, controlling the voltage sent to a series of incandescent light bulbs to create an instructional workshop experience for the audience.
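
The idea of an audio-driven dimmer channel can be illustrated by measuring the RMS level of each short block of audio and scaling it to the 0 to 255 range of a DMX channel. This Python sketch is not how VenueMagic works internally; the block-based RMS measurement, the `gain` parameter, the track names and the function names are assumptions for illustration.

```python
import math


def rms(block):
    """Root-mean-square level of a block of samples in the range -1.0..1.0."""
    if not block:
        return 0.0
    return math.sqrt(sum(s * s for s in block) / len(block))


def dmx_level(block, gain=1.0):
    """Convert one block of audio to an 8-bit DMX dimmer value (0..255)."""
    level = min(rms(block) * gain, 1.0)
    return round(level * 255)


# One DMX channel per audio track: each block of each track sets one dimmer.
tracks = {
    "track_1": [0.0] * 64,          # silence -> lamp off
    "track_2": [0.5, -0.5] * 32,    # half-scale signal
}
frame = {name: dmx_level(block) for name, block in tracks.items()}
print(frame)  # prints: {'track_1': 0, 'track_2': 128}
```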

Motion Control - More Experiments Hacking XBOX Kinect

I've recently had some time to continue developing a system to take data from the Microsoft Kinect camera, to control sound using the human body. I've created a number of 'body instruments' that use different modulation and trigger techniques for controlling and generating sound in real time.

I've also started using live looping to build music and soundtracks from scratch, using my body and a foot controller to trigger presets and recording. I'm interested in taking this technology into dance and theatre to create close connections between sound and image in a performance setting.
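
A footswitch looper can be modelled as a small state machine. The Python sketch below shows one possible single-switch scheme (record, then play, then toggle overdub); the states, the transition order and the class name are assumptions, not a description of the actual MAX and Ableton Live setup.

```python
from enum import Enum


class LoopState(Enum):
    EMPTY = "empty"
    RECORDING = "recording"
    PLAYING = "playing"
    OVERDUBBING = "overdubbing"


class FootswitchLooper:
    """Hypothetical single-footswitch looper: each press advances the state."""

    def __init__(self):
        self.state = LoopState.EMPTY
        self.loop_length = None   # seconds, fixed when the first take ends
        self._record_start = None

    def press(self, time_s: float) -> LoopState:
        if self.state is LoopState.EMPTY:
            self.state = LoopState.RECORDING
            self._record_start = time_s
        elif self.state is LoopState.RECORDING:
            # Closing the first take sets the loop length for later layers.
            self.loop_length = time_s - self._record_start
            self.state = LoopState.PLAYING
        elif self.state is LoopState.PLAYING:
            self.state = LoopState.OVERDUBBING
        else:  # OVERDUBBING
            self.state = LoopState.PLAYING
        return self.state

    def clear(self) -> None:
        """Discard the loop, e.g. from a second footswitch."""
        self.state = LoopState.EMPTY
        self.loop_length = None
        self._record_start = None


looper = FootswitchLooper()
for t in (0.0, 4.0, 8.0, 12.0):
    print(t, looper.press(t).value)
print("loop length:", looper.loop_length)
```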